vol. 33 (sep. 26 - oct. 10)

beep beep boop boop, happy monday-tuesday

Excited to share a story featuring a petition I helped write to the DOJ urging a Title VI review and enforcement push about federally funded purchases of ShotSpotter among other carceral technologies. Title VI bans "discrimination on the basis of race, color, or national origin in any program or activity that receives Federal funds or other Federal financial assistance" -- Title VI is a perfect vehicle to remedy some of the federally supported but racist algorithmic programs, and I’ve been working on this one with my colleagues for the last while!

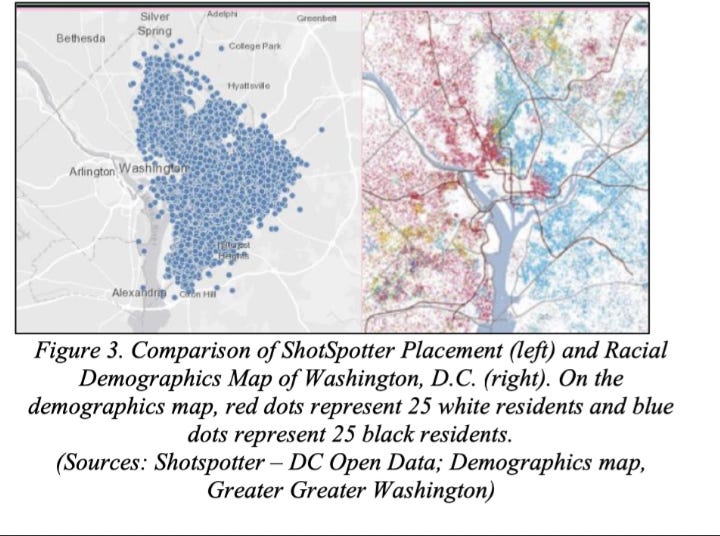

ShotSpotter perpetuates and exacerbates historically discriminatory policing patterns. In at least some of the cities we've been able to get information about, ShotSpotter sensors are placed disproportionately in majority-minority communities. That produces more "evidence" (selective data) of gunshots (loud noises), which is then used to justify increased policing. It often doesn't even work: less than 10% of alerts led to evidence. Because of where the sensors are placed, those faulty alerts give the false impression that a given location is more dangerous or needs more policing, and they prime police to go into a situation aggressively. Even when the sensors are placed in areas that are traditionally “high-crime,” that label is a reflection of historical police practices in that area. It does nothing to break the cycle, just keeps it going. (WIRED)

The ever-changing representations about security and privacy made by AI companies are about as reliable as my Giants offensive line :(. Google (seemingly accidentally) indexed people’s chats with its chatbot “Bard” and included them in public Google search results. (Fast Company)

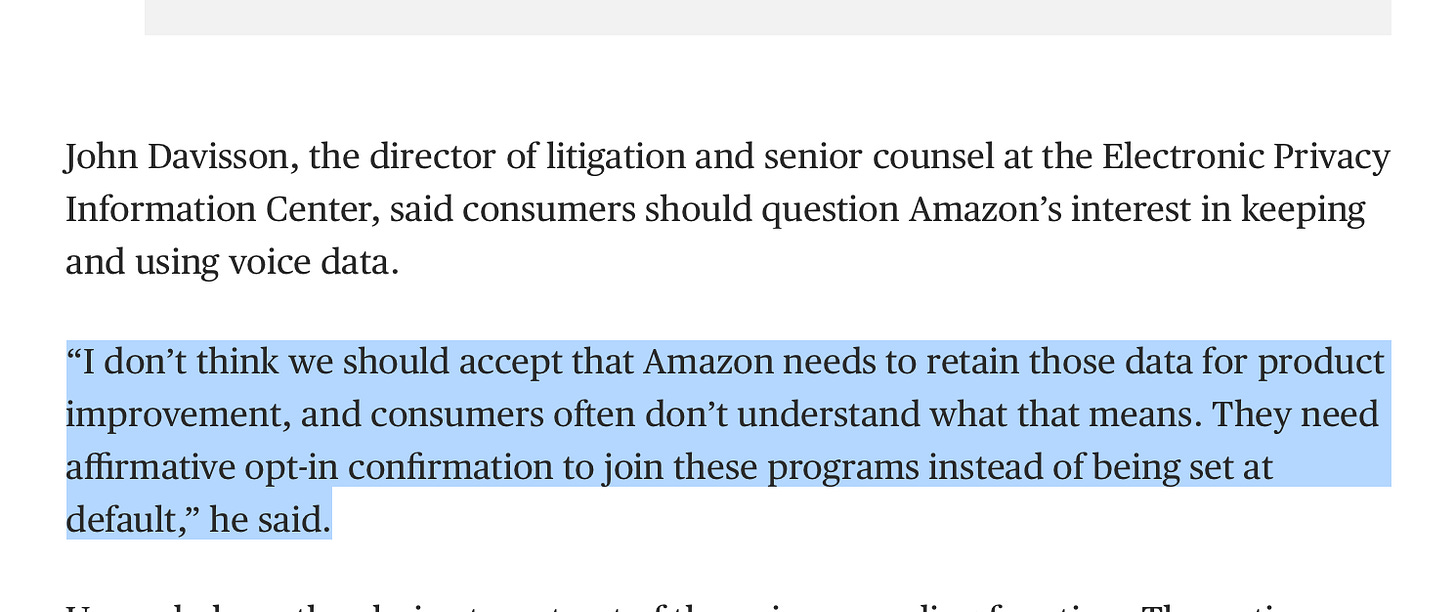

Amazon wants to use voice command data to train their Alexa AI. I won’t try to say it better than my colleague John: (NBC News)

We're in one of the major, sudden news cycles since consumer generative AI tools like ChatGPT were released. That, combined with the pay-for-algorithmic-prioritization model that El*n M*sk instituted, is unsurprisingly leading to an absolute disinformation dumpster fire. (WIRED)

Shortly after saying they would expand their election integrity staff, X fired their election integrity team. It’s not worth explaining or taking down the owner’s reasoning for this, but it’s an absolute B.S. move and should light a fire under the ass of legislators before we go from bad to worse. (WIRED)

Definitely check out this great write-up of an EXCELLENT event put on by the FTC that allowed creatives (writers, producers, voice actors, so many) to explain the precarious position they’re placed in and to voice their very specific and reasonable demands for AI regulation to restore some sort of power balance. Eventually I will clip the best parts and share them here, because these creators were so articulate, direct, and convincing. (VentureBeat)

One creator mentioned how impossible and insufficient the “opt out” is that AI companies offer to people who want their content excluded from a training set for an AI model, like this one for OpenAI’s image generator. (MSN)

The NY state legislature banned artificially generated revenge porn. It’s a slam dunk move, but only a few states have done it. It’s important because some states define photograph, video, etc. in a way that is either unclear or wouldn’t cover an AI-generated representation of someone nude. Regardless of whether it’s a real representation or an AI-generated one, it’s still a violation of the intent of revenge porn laws. (Bloomberg)

The NY state education commissioner banned facial recognition for schools after a statewide report showed ‘risks outweigh benefits.’ (AP)